Of course, software systems cost money to run. If you run them on-premises, you may have bought too much hardware to keep up with possible business and development needs or to cope with peak loads. In the cloud, on the other hand, there is no need to have an excessive amount of resources on hand, allowing projects and businesses to avoid this risk altogether. So can IT managers sit back and trust that they are getting the best possible value for their budget?

There are two classic situations in which too many or too large resources are provided in the cloud. The positive scenario: The company starts many new projects and is very innovative. The negative scenario: Not enough knowledge has been built up about IT operations in the cloud. This is because provisioning there takes place under different conditions than in traditional on-premises operation, where new or larger resources are only available once the operations department (“Operations”) has installed them.

In both cases, it is possible to keep costs under control in the cloud. For example, you can set up monitoring systems, involve the operations department in the provision of new resources and clean up regularly. However, to take on this new responsibility, the operations department needs to build new skills.

Furthermore, you can define a focus on costs as a requirement for DevOps projects in the company. This blog post is based on a DevOps mindset, but the principles can also be applied in an organization where development and operations run separately.

Below we will cover horizontal and vertical scaling. Vertical scaling means making an existing resource larger or smaller (for example, more or fewer cores and memory), whereas horizontal scaling means adding or removing instances of a resource.

The timing

There are several possible triggers for starting to work on costs:

You decide to do this from the beginning. But then you don’t know where to focus your efforts. You don’t yet have any empirical data on how resources will be used over time.

Alternatively, you can tackle this if Azure’s built-in wizard recommends it. But in this situation, Azure may lack information about how the resources are being used.

Or you only deal with it when notifications/alerts come in from Azure that a budget has been exceeded. However, this assumes that you have been able to set a realistic budget in advance. This can be difficult as even then there is a lack of empirical data on usage over time.

For us, this is done continuously as our projects mature and our clients’ focus on the costs associated with their “new” cloud solutions increases.

58% cost saving

In one of our projects in the financial sector, where we run a large part of our client’s infrastructure, we were able to clearly identify several areas of savings.

Many of the client's systems are based on Azure App Service, which provides almost unlimited access to IT resources. This allows us to smoothly implement new business requirements as they arise.

Once the systems have matured and clear usage patterns can be identified and predicted, we continuously analyze the capacities used compared to those provided. This allows us to identify areas where capacity adjustments are possible.

The development process for the client follows CI/CD principles, so development and test environments are needed in which all changes are load tested before they go live. In the early stages of the project, the need for availability of these environments was difficult to predict. But over time, better forecasts of resource requirements for both manual and automated testing were possible. This provided opportunities to minimize the cost of these resources.

The predictability allowed us to set an automatic adjustment to the number of instances in the app service plan for the test environment, which depended on the scheduled working time.
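A schedule-based adjustment of this kind can be sketched in a few lines of Python. This is only an illustration of the idea, not the configuration used in the project: the working hours and instance counts below are hypothetical, and in practice the rule would typically be expressed as a scheduled autoscale profile rather than as application code.

```python
from datetime import datetime

# Hypothetical values: the actual hours and instance counts are not stated in this post.
WORK_START_HOUR = 7
WORK_END_HOUR = 18
INSTANCES_WORKING = 3
INSTANCES_IDLE = 1


def desired_instance_count(now: datetime) -> int:
    """Return the instance count the test environment should run at `now`."""
    is_weekday = now.weekday() < 5  # Monday is 0, Friday is 4
    in_working_hours = WORK_START_HOUR <= now.hour < WORK_END_HOUR
    if is_weekday and in_working_hours:
        return INSTANCES_WORKING
    return INSTANCES_IDLE
```

Calling `desired_instance_count(datetime.now())` from a scheduled job would give the target that the plan should be scaled to at that moment.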

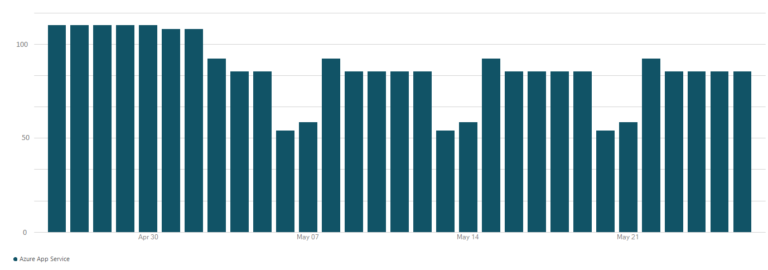

The following diagram shows the resulting savings (the scale of the Y-axis has been converted to an index). It is easy to see that the automatic adjustment had an impact from around May 3. Compared to the configuration before May 3, we achieved a saving of 28.6%.

Of course, all our projects use Azure’s built-in methods for automatically scaling resources, including the various scaling options for App Services. However, Azure does not offer automatic scaling of the App Service plan SKU (Stock Keeping Unit) provided, which ties up a larger part of the operating costs. By SKU, Azure refers to a specific configuration of an Azure product. For example, the App Service plan SKU “P2v3” is the designation for a specific “server size” with 4 cores, 16 GB RAM and 250 GB storage.

Therefore, we have developed mechanisms to scale down the App Service plan SKUs for the development environment outside of regular working hours. They are designed, of course, so that the larger SKU can be quickly reactivated if a situation requires this outside of working hours.

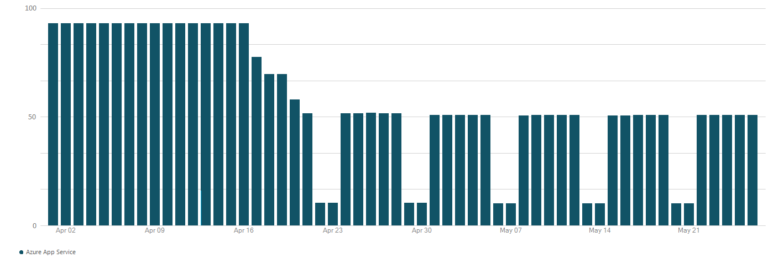

The next diagram shows the resulting savings (the scale of the Y-axis has been converted into an index). Around April 16, an automatic scaling of the SKU for the App Service plans was set up in the development environment so that a smaller SKU is used in the evening hours and on weekends. Compared to the configuration before April 16, we achieved a saving of 58%.
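To get a feeling for how a saving of this magnitude can arise, the effect of running a smaller SKU outside working hours can be estimated with simple arithmetic. The sketch below assumes a flat hourly rate per SKU; the rates and the number of working hours in the usage example are hypothetical and are not the project's figures.

```python
HOURS_PER_WEEK = 7 * 24


def weekly_saving_pct(large_rate: float, small_rate: float,
                      working_hours_per_week: float) -> float:
    """Percentage saved per week by using the small SKU outside working hours.

    `large_rate` and `small_rate` are hourly prices (any currency, hypothetical).
    """
    if not 0 <= working_hours_per_week <= HOURS_PER_WEEK:
        raise ValueError("working_hours_per_week must be between 0 and 168")
    baseline = large_rate * HOURS_PER_WEEK  # large SKU around the clock
    off_hours = HOURS_PER_WEEK - working_hours_per_week
    scheduled = large_rate * working_hours_per_week + small_rate * off_hours
    return 100 * (baseline - scheduled) / baseline
```

For example, `weekly_saving_pct(1.0, 0.25, 50)` models a small SKU at a quarter of the price and 50 working hours per week. Because working hours make up less than a third of the week, the price of the off-hours SKU dominates the result.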

Resources under the microscope

For another client, we created the backend for an extensive online shop. When the project had reached a certain maturity, we agreed with the client’s IT manager to review the costs of their cloud resources to see if they could be reduced. Since the utilization of the resources was known, the conditions for savings were also good.

So we took a close look at the biggest items in the development, test and production environments. The focus was primarily on the most cost-intensive resources. Many resources were not prioritized because the time spent on them could not have been financed by savings in the resources concerned.
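The prioritization described above boils down to a simple comparison: is the expected saving over some period larger than the cost of the work? A minimal sketch, assuming you have rough estimates per resource (the `Candidate` type, its fields and the 12-month horizon are hypothetical choices for this illustration):

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    monthly_cost: float           # current monthly cost of the resource
    expected_saving_ratio: float  # estimated share that can be saved, 0.0 to 1.0
    effort_cost: float            # one-off cost of the optimization work


def monthly_saving(c: Candidate) -> float:
    return c.monthly_cost * c.expected_saving_ratio


def prioritize(candidates: List[Candidate], horizon_months: int = 12) -> List[Candidate]:
    """Keep resources whose savings pay for the effort, largest saving first."""
    worthwhile = [c for c in candidates
                  if monthly_saving(c) * horizon_months > c.effort_cost]
    return sorted(worthwhile, key=monthly_saving, reverse=True)
```

Resources that drop out of the list are the ones where the time spent could not be financed by the saving itself.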

Specific savings were made in Azure SQL, API Management, the App Service plan and Data Factory.

Reserved instances were used for Azure SQL relatively early in the project. With a reservation, you pay a fixed, lower price for some instances and in return commit for a period of 1 or 3 years. This is a good example of a resource where you can only optimize costs if the utilization is known.

API Management is shut down in the development and test environments during the hours when no development or testing is taking place.
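Why known utilization matters can be shown with a small break-even calculation. The sketch assumes a simplified pricing model in which a reservation is paid for every hour of the term, while pay-as-you-go is paid only for the hours the resource actually runs. The prices in the example are hypothetical.

```python
def break_even_utilization(pay_as_you_go_hourly: float, reserved_hourly: float) -> float:
    """Share of the term the resource must run for the reservation to break even."""
    return reserved_hourly / pay_as_you_go_hourly


def reservation_pays_off(pay_as_you_go_hourly: float, reserved_hourly: float,
                         expected_utilization: float) -> bool:
    """True if reserving is cheaper than paying on demand.

    `expected_utilization` is the fraction of the term (0.0 to 1.0) during
    which the resource would otherwise run on pay-as-you-go.
    """
    on_demand_cost_per_term_hour = pay_as_you_go_hourly * expected_utilization
    return reserved_hourly < on_demand_cost_per_term_hour
```

With a hypothetical on-demand price of 1.0 and a reserved price of 0.65 per hour, the reservation only pays off if the resource runs more than 65% of the time, which is something you can only judge once usage patterns are known.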

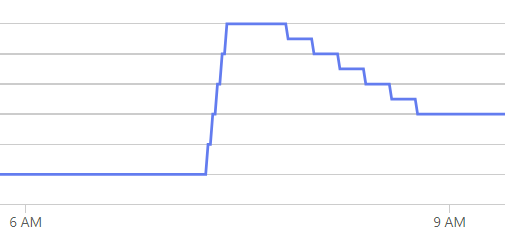

The App Service plan needs to scale very quickly when end customers shop online, so that it can keep up with the often large number of requests. Most customers shop between 6 AM and 4 PM, Monday to Friday. We also introduced paging for some requests, which results in more but smaller requests and thus reduces the load on any single instance. In addition, we created reduced versions of the responses that can be used when the online shop only needs a subset of the data from the original response. Finally, we added more rules for horizontal scaling so that scaling is less aggressive outside the times when most customers are online.

Here you can see a very fast horizontal scaling of an app service plan around 7 AM.
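The paging mentioned above can be illustrated with a generic helper that splits a large result into smaller pages. This is a minimal sketch of the technique, not the shop's actual API; the function name and the page size are assumptions.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def paginate(items: Sequence[T], page_size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of `items` with at most `page_size` elements each."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    for start in range(0, len(items), page_size):
        yield items[start:start + page_size]
```

Instead of one response carrying every item, the client requests one page at a time, so each request does less work and can be served by whichever instance is available.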

Data Factory can be part of the solution

Azure Data Factory can use a self-hosted integration runtime. This client already had one for other reasons, and it is less expensive than an Azure integration runtime. In addition, all Copy activities (a subtask in Data Factory that copies data) were configured with two Data Integration Units, so that the more expensive default setting is not used. Similarly, we configured the Data Flow activities to use 8 vCores unless a critical data flow requires more. Pipelines and data flows have timeouts configured, so they stop trying to complete an operation if, for example, data or services are unavailable. Pipelines are now also scheduled to update data only during the online shop’s peak hours. Finally, automatic monitoring ensures that the various activities in Azure Data Factory keep the most cost-effective configuration.

After this work for the client, the costs for the resources above were reduced by 10% to 50%, a significant saving in the IT budget.
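A monitoring check of this kind could look roughly like the sketch below, which inspects activity definitions that have been exported as JSON and loaded into Python dictionaries. The field names (`dataIntegrationUnits`, `compute.coreCount`, `policy.timeout`) follow the pipeline JSON format as we understand it and should be verified against your own exported pipelines; the function itself and its thresholds are hypothetical and not the monitoring used in the project.

```python
from typing import List

MAX_DIU = 2             # Data Integration Units allowed for Copy activities
MAX_DATAFLOW_CORES = 8  # vCores allowed for non-critical data flows


def audit_activity(activity: dict, critical: bool = False) -> List[str]:
    """Return a list of cost-related findings for one activity definition."""
    findings = []
    kind = activity.get("type")
    props = activity.get("typeProperties", {})

    if kind == "Copy":
        diu = props.get("dataIntegrationUnits")
        if diu is None or diu > MAX_DIU:
            findings.append("Copy activity does not pin DIUs to the allowed maximum")
    elif kind == "ExecuteDataFlow" and not critical:
        cores = props.get("compute", {}).get("coreCount")
        if cores is None or cores > MAX_DATAFLOW_CORES:
            findings.append("Data flow uses more cores than allowed or leaves them unset")

    if "timeout" not in activity.get("policy", {}):
        findings.append("No explicit timeout configured")
    return findings
```

Running such a check on a schedule, or as part of the deployment pipeline, flags activities that drift away from the agreed settings. Data flows marked as critical are exempt from the core limit, in line with the exception described above.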

What can you do to put your solutions to the test?

Does your project or organization meet the right requirements to optimize the cost of your cloud solution? Here are some questions you should address first:

- Do you have the right skills to optimize costs?

- Do you want to move from an operations and development set-up to DevOps?

- How much money could you save on your cloud solutions without increasing time-to-market or compromising your ability to experiment with new solutions that benefit the business?

Whether there is something in it for you can often be determined in a fairly short time after taking a close look at the set-up. If you need help with this process, we will of course be happy to support you. We are just a phone call away.

*The above text is a translation of the Danish original. You can find the Danish-language version here.